How to Run AI on Microcontrollers with TinyML at the Edge

Deploying AI at the edge using TinyML enables real-time predictions on microcontrollers for maintenance, gesture control, and anomaly detection.

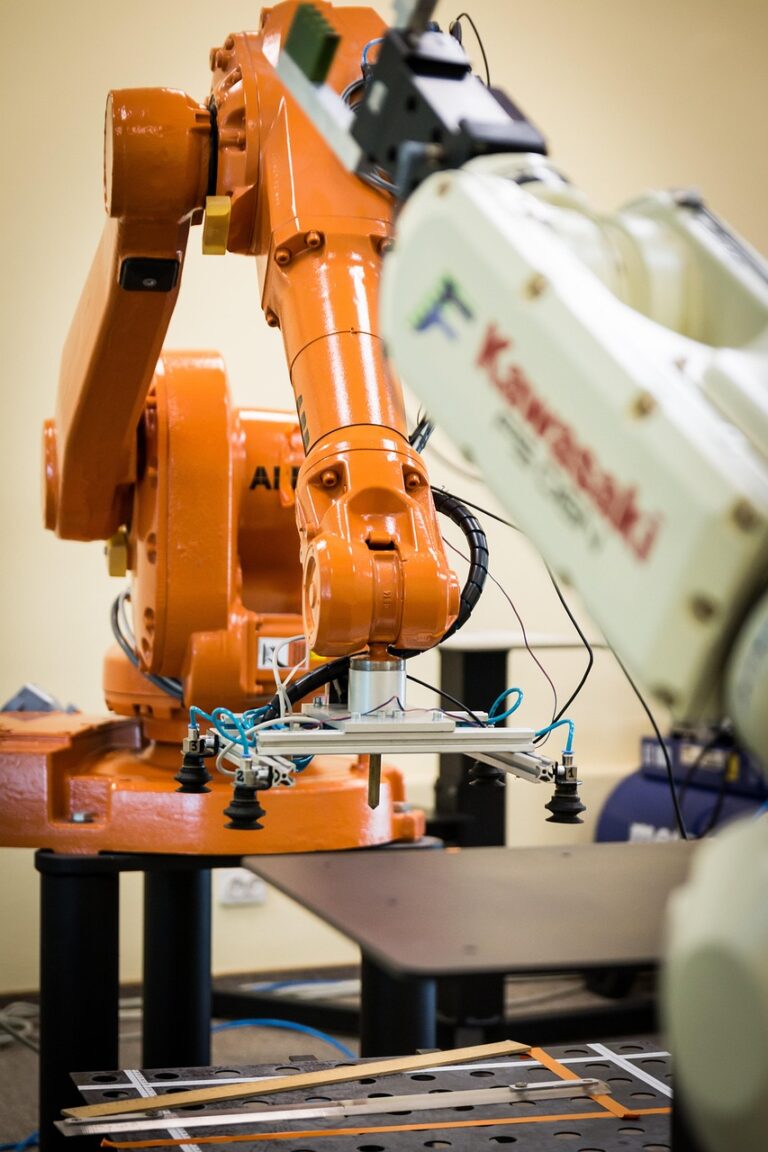

As industrial systems evolve toward greater autonomy and speed, there’s a growing demand for computing capabilities that can function directly at the source of data. This has fueled the rise of TinyML, an approach that integrates machine learning (ML) into microcontrollers and other ultra-low-power embedded systems. TinyML allows real-time insights on the device, eliminating reliance on cloud-based systems. In applications ranging from predictive maintenance in smart factories to gesture control in smart homes, AI at the edge is fundamentally changing how embedded systems are designed and deployed.

Why AI at the Edge Is Gaining Momentum

Traditional machine learning workflows typically involve collecting data at the edge, transmitting it to the cloud for analysis, and then sending back the results. While this architecture works in many cases, it introduces several critical challenges, such as network latency, increased power consumption, data privacy concerns, and dependence on constant connectivity. Edge AI addresses these issues by performing inference locally, on the device where data is generated. This brings several advantages: significantly reduced latency since there’s no need for round-trip communication; decreased bandwidth and power usage, which is crucial for battery-operated devices; enhanced privacy as sensitive data remains local; and uninterrupted functionality even in offline scenarios. These benefits make TinyML especially useful in industrial automation, remote monitoring, healthcare wearables, and environmental sensing.

What Is TinyML?

TinyML refers to the practice of running machine learning models on microcontrollers, hardware platforms typically characterized by minimal memory, processing power, and energy resources. Unlike traditional ML deployments that require powerful CPUs or GPUs, TinyML enables model inference on devices with as little as 256 KB of RAM and clock speeds under 100 MHz. Yet despite these constraints, TinyML has proven capable of handling tasks such as gesture recognition, sound classification, and anomaly detection.

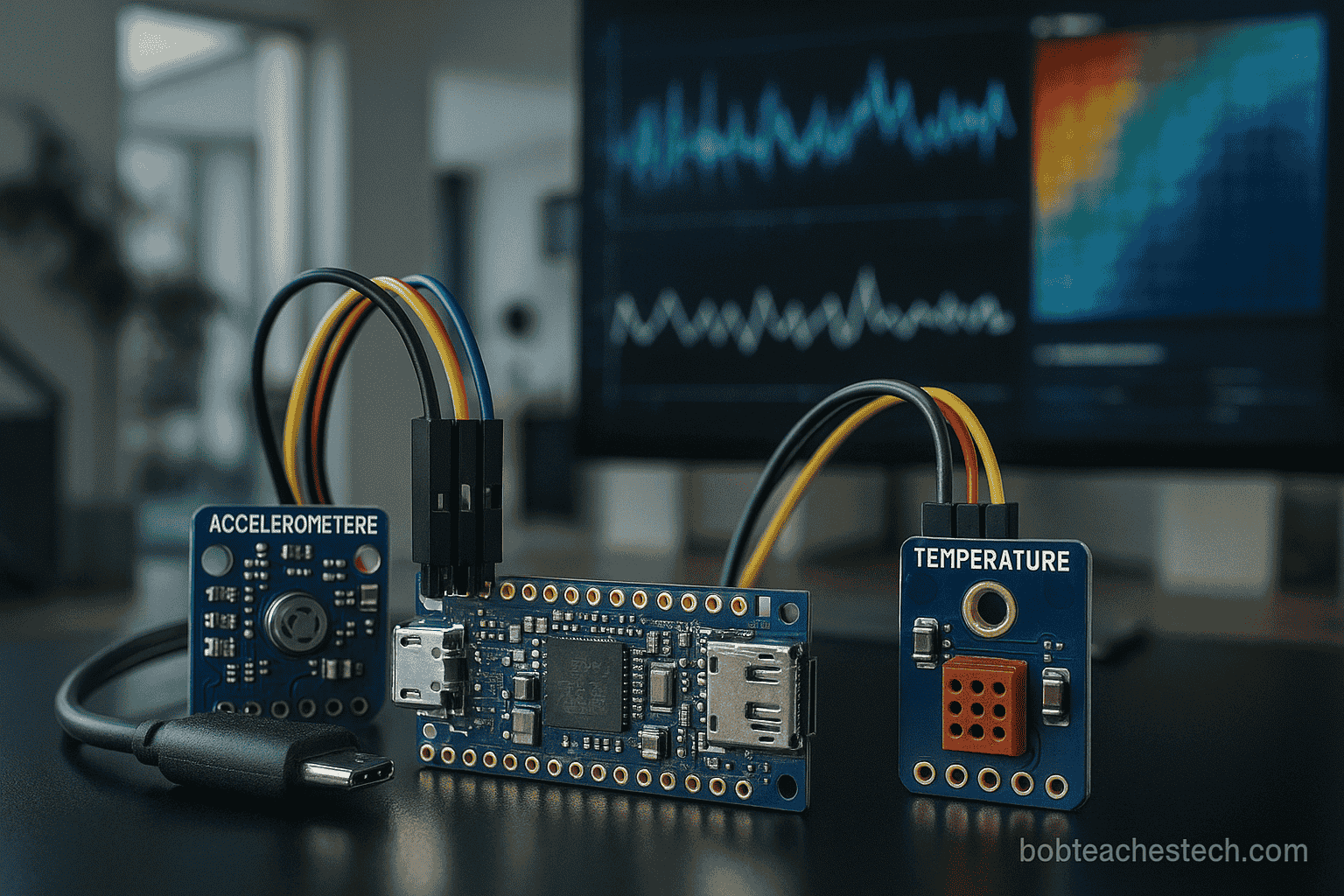

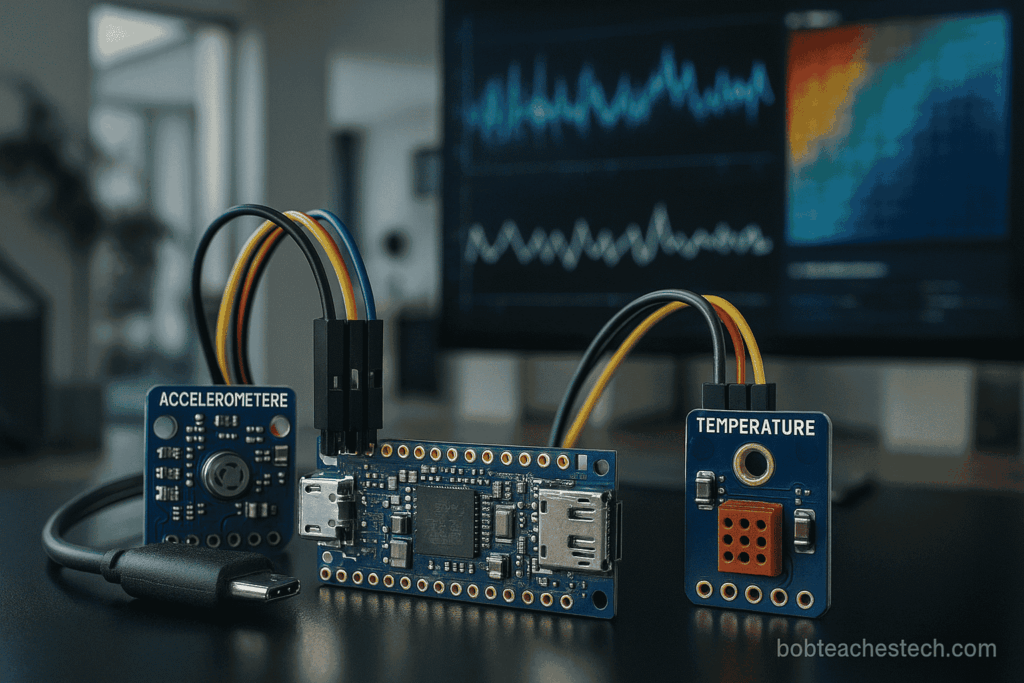

Microcontrollers well-suited for TinyML include the Arduino Nano 33 BLE Sense (based on the nRF52840 chipset), the STM32F4 series commonly used in industrial control, and the ESP32 S3, which even includes AI acceleration support. These platforms provide a good balance between cost, power efficiency, and peripheral support for sensors, making them ideal for edge intelligence projects.

Frameworks and Tools for Edge AI

One of the most widely adopted frameworks for TinyML is TensorFlow Lite for Microcontrollers (TFLM), a version of TensorFlow explicitly designed for devices with very low resources. TFLM enables developers to train models on a PC or in the cloud and then deploy highly optimized, quantized versions of those models to microcontrollers. The typical workflow starts with collecting sensor data, training a model using tools like Keras or TensorFlow, then applying post-training quantization to reduce the model size from floating point (float32) to integer (int8). The model is then converted into a C array (model_data.cc) that can be compiled directly into the microcontroller firmware.

Besides TFLM, several other tools support the TinyML development pipeline. Edge Impulse offers an end-to-end platform that simplifies data collection, model training, and deployment to embedded devices, all from a user-friendly web interface. CMSIS, NN, developed by ARM, provides optimized neural network kernels for Cortex-M processors, enhancing the runtime performance of inference. Developers also experiment with tools like uTensor and MicroTVM depending on project requirements.

Real, World Applications of TinyML

One powerful use of TinyML is predictive maintenance. For example, by mounting a low-cost accelerometer such as the ADXL345 onto a motor, vibration patterns can be continuously monitored. These raw signals are then processed using techniques like Fast Fourier Transform (FFT) to extract frequency and domain features. A trained machine learning model, often a lightweight decision tree or convolutional neural network (CNN), can classify whether the motor is operating normally or showing signs of imbalance, misalignment, or bearing faults. With inference running locally on a microcontroller, alerts can be generated instantly without relying on remote diagnostics.

Another compelling application is gesture recognition. Devices equipped with Inertial Measurement Units (IMUs) can capture hand or body movement data. For example, a microcontroller connected to a 3-axis accelerometer and gyroscope can be trained to detect gestures such as swipes, taps, or rotations. These gestures are recognized using time series models like Long Short-Term Memory (LSTM) networks or 1D CNNs. Once deployed, the system can enable intuitive interaction with devices, such as adjusting light brightness with a wave of the hand.

TinyML is also used in anomaly detection scenarios. For instance, monitoring temperature or current data in industrial systems can reveal unusual patterns that indicate equipment malfunctions. Autoencoder neural networks trained on normal operational data can run locally to detect when incoming data significantly deviates from expected patterns. In simpler setups, statistical models or rule-based thresholds may suffice. These real-time detections enhance system reliability while minimizing false alarms.

Optimization Techniques for Efficient Deployment

Due to the resource-constrained nature of microcontrollers, several optimization techniques are essential for deploying functional TinyML models. Quantization is a primary strategy, reducing the model’s precision from 32-bit floats to 8-bit integers, thereby shrinking model size and accelerating inference without significantly sacrificing accuracy. Pruning further compresses the model by eliminating unnecessary weights or neurons, while fixed-point arithmetic replaces computationally intensive floating-point operations with faster, simpler alternatives. Additional optimization methods include operator fusion, which merges consecutive operations to reduce memory access overhead.

An optimized 8-bit quantized model for a gesture recognition task, for instance, can operate within 32 KB of flash memory, use less than 16 KB of RAM, and deliver inference results in under 10 milliseconds on a 64 MHz Cortex-M4 processor. These performance metrics make it feasible to embed intelligence in low-cost hardware without offloading data or processing.

Future Trends and Industrial Implications

The TinyML landscape continues to evolve rapidly, with several emerging trends that promise to expand its capabilities. Federated learning is gaining interest for edge scenarios, allowing microcontrollers to train local models and periodically contribute updates to a shared global model, all while keeping raw data private. Advances in sensor hardware and AI accelerators, such as the Syntiant NDP101 and Himax WE I Plus, are integrating neural processing units (NPUs) into ultra-low-power chips, pushing performance boundaries even further.

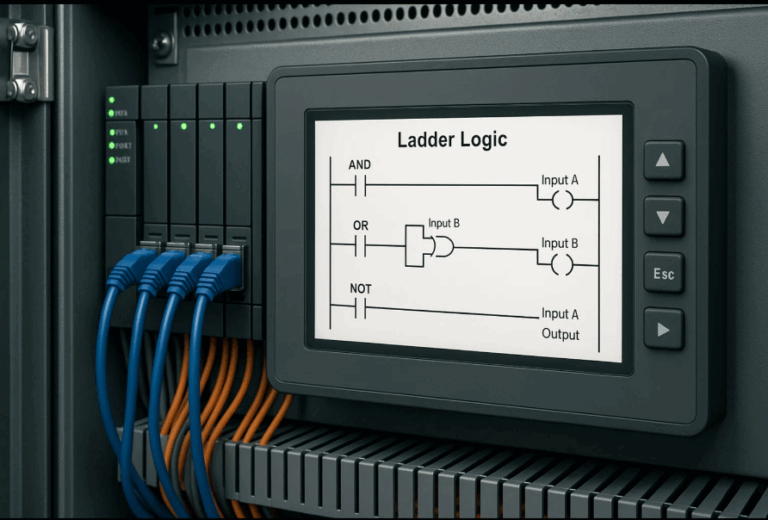

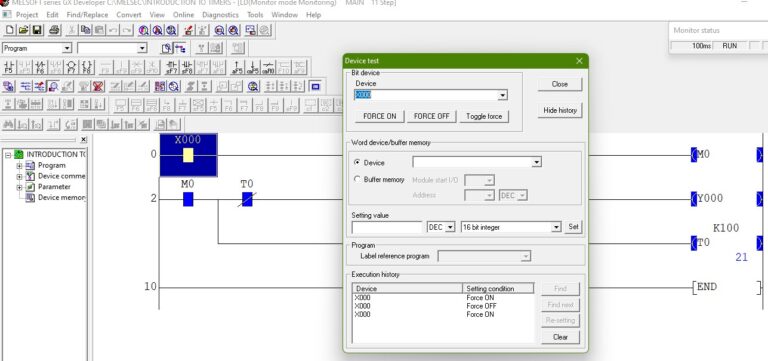

In industrial settings, the combination of TinyML with edge PLCs, SCADA systems, and HMIs introduces new levels of adaptability and intelligence. For example, a self-calibrating PLC input module could use embedded ML models to adjust thresholds or detect sensor drift in real-time. The integration of TinyML with communication technologies like LoRaWAN allows for broad, area, low-power deployments that include both sensing and intelligence.

TinyML is no longer just an academic exercise; it’s a practical, cost-effective strategy for building responsive, reliable, and energy-efficient edge systems. As frameworks and hardware continue to mature, engineers and developers will find increasing opportunities to infuse AI directly into their embedded designs.